Setting Up a Hadoop 3.3.6 Multi-Node Cluster with WSL2, Docker, and Docker Compose

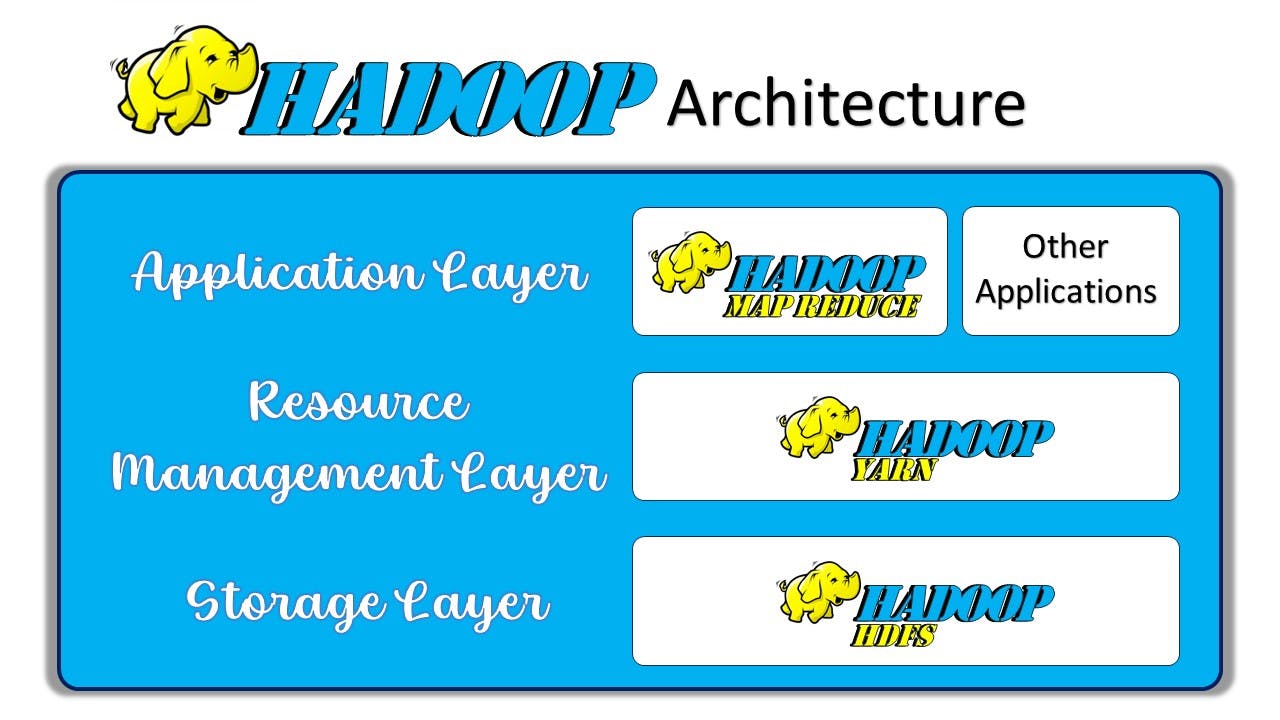

Greetings, fellow data enthusiasts! Today, we embark on a thrilling voyage into the realm of Hadoop, the cornerstone of big data processing. Let's first understand Hadoop and its architecture.

What is Hadoop?

Hadoop is an open-source framework designed for distributed storage and processing large data sets across clusters of commodity hardware. Developed by the Apache Software Foundation, Hadoop is known for its scalability, fault tolerance, and high-throughput data processing capabilities.

hadoop architecture diagram

Here are the core components that make up the Hadoop ecosystem:

- Hadoop Distributed File System (HDFS): A distributed file system that stores data across multiple machines, providing high availability and reliability. HDFS splits files into large blocks and distributes them across nodes in the cluster, replicating each block for fault tolerance.

- MapReduce: A programming model and processing engine for large-scale data processing. MapReduce enables developers to write programs that process large amounts of data in parallel across a Hadoop cluster by dividing the workload into two main tasks: the 'Map' task (which processes and filters data) and the 'Reduce' task (which aggregates the results).

- YARN (Yet Another Resource Negotiator): A resource management layer that schedules and manages resources across the Hadoop cluster. YARN allows multiple data processing engines, such as batch, interactive, and real-time processing, to run and manage their workloads simultaneously on a single platform.

- Hadoop Common: A set of shared utilities and libraries supporting other Hadoop components. These utilities provide necessary services like file systems and OS-level abstractions.

Hadoop's architecture allows for horizontal scaling, meaning you can add more nodes to the cluster to increase processing power and storage capacity. Its design for handling failures and its ability to process large volumes of data make it a preferred choice for big data analytics and storage solutions in industries ranging from finance to healthcare to technology.

Before we delve into the technical intricacies, let's ensure our environment is primed for the adventure ahead. Firstly, ensure you have WSL2 installed on your Windows machine. WSL2 provides a Linux kernel interface for running Linux distributions natively on Windows. Next, let's set sail into the world of containers with Docker. Docker simplifies the deployment process by encapsulating applications and their dependencies into lightweight containers. Lastly, ensure you have Docker Compose installed. Docker Compose allows us to define multi-container applications in a single file, making orchestration a breeze.

Ahoy, mates! With our environment ready, let's hoist the sails and embark on configuring our Hadoop cluster. Let's first setup the project structure.

We will follow the below architecture for our setup:

The project structure looks like this:

env-setup

|_ conf

|_ core-site.xml

|_ hdfs-site.xml

|_ mapred-site.xml

|_ yarn-site.xml

|_ datanode

|_ Dockerfile

|_ run.sh

|_ historyserver

|_ Dockerfile

|_ run.sh

|_ namenode

|_ Dockerfile

|_ run.sh

|_ nodemanager

|_ Dockerfile

|_ run.sh

|_ resourcemanager

|_ Dockerfile

|_ run.sh

|_ Dockerfile

|_ docker-compose.yml

|_ start-cluster.shLet's start with the conf folder. In the intricate ecosystem of Hadoop, configuration files serve as the blueprint for orchestrating its various components, ensuring they work harmoniously to process and manage data efficiently. Think of them as the guiding principles that dictate how Hadoop behaves within a cluster environment.

core-site.xml

This configuration file lays the foundation for Hadoop's file system operations. Here, critical parameters like fs.defaultFS define the default file system URI, essentially the home base for Hadoop's data storage. It's akin to setting the GPS coordinates for navigating through the data landscape.

1<?xml version="1.0" encoding="UTF-8"?> 2<?xml-stylesheet type="text/xsl" href="configuration.xsl"?> 3<!-- 4 Licensed under the Apache License, Version 2.0 (the "License"); 5 you may not use this file except in compliance with the License. 6 You may obtain a copy of the License at 7 8 http://www.apache.org/licenses/LICENSE-2.0 9 10 Unless required by applicable law or agreed to in writing, software 11 distributed under the License is distributed on an "AS IS" BASIS, 12 WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. 13 See the License for the specific language governing permissions and 14 limitations under the License. See accompanying LICENSE file. 15--> 16 17<!-- Put site-specific property overrides in this file. --> 18 19<configuration> 20 <property> 21 <name>fs.defaultFS</name> 22 <value>hdfs://namenode:8020</value> 23 <description>Where HDFS NameNode can be found on the network</description> 24 </property> 25 26 <property> 27 <name>hadoop.http.staticuser.user</name> 28 <value>root</value> 29 <description>Whose can view the logs in the container application</description> 30 </property> 31 32 <property> 33 <name>io.compression.codecs</name> 34 <value>org.apache.hadoop.io.compress.SnappyCodec</value> 35 </property> 36</configuration>

1. fs.defaultFS

- Purpose: This property defines the default file system URI for Hadoop.

- Value: hdfs://namenode:8020hdfs:// specifies that the default file system is Hadoop Distributed File System (HDFS).namenode is the hostname of the NameNode, which is the central metadata server in an HDFS cluster.8020 is the default port on which the NameNode listens for client connections.

- Description: Indicates where the HDFS NameNode can be found on the network. It's crucial for clients to know the location of the NameNode to access the HDFS file system.

2. hadoop.http.staticuser.user

- Purpose: Specifies the user account used to access certain resources, particularly the logs in the container application.

- Value: rootIn this case, the logs in the container application can be viewed by the user with the username root.

- Description: Specifies the user who can view the logs in the container application. This property is particularly relevant in environments where security and access control are paramount.

3. io.compression.codecs

- Purpose: Defines the list of compression codecs available for use in Hadoop.

- Value: org.apache.hadoop.io.compress.SnappyCodecSnappyCodec is a compression codec provided by Hadoop, known for its fast compression and decompression speeds.

- Description: Specifies the compression codecs that Hadoop can utilize when reading or writing data. Compression is often used in Hadoop to reduce storage space and improve data transfer speeds.

hdfs-site.xml

Hadoop's Distributed File System (HDFS) finds its directives within this file. Parameters such as dfs.replication specify the number of times data blocks are replicated across the cluster, ensuring fault tolerance and data redundancy. Additionally, it outlines the directory paths for the NameNode and DataNode, crucial components responsible for managing metadata and storing data blocks, respectively.

1<?xml version="1.0" encoding="UTF-8"?> 2<?xml-stylesheet type="text/xsl" href="configuration.xsl"?> 3<!-- 4 Licensed under the Apache License, Version 2.0 (the "License"); 5 you may not use this file except in compliance with the License. 6 You may obtain a copy of the License at 7 8 http://www.apache.org/licenses/LICENSE-2.0 9 10 Unless required by applicable law or agreed to in writing, software 11 distributed under the License is distributed on an "AS IS" BASIS, 12 WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. 13 See the License for the specific language governing permissions and 14 limitations under the License. See accompanying LICENSE file. 15--> 16 17<!-- Put site-specific property overrides in this file. --> 18 19<configuration> 20 <property> 21 <name>dfs.webhdfs.enabled</name> 22 <value>true</value> 23 </property> 24 25 <property> 26 <name>dfs.permissions.enabled</name> 27 <value>false</value> 28 </property> 29 30 <property> 31 <name>dfs.permissions</name> 32 <value>false</value> 33 </property> 34 35 <property> 36 <name>dfs.namenode.name.dir</name> 37 <value>/hadoop/dfs/name</value> 38 </property> 39 40 <property> 41 <name>dfs.datanode.data.dir</name> 42 <value>/hadoop/dfs/data</value> 43 </property> 44 45 <property> 46 <name>dfs.namenode.datanode.registration.ip-hostname-check</name> 47 <value>false</value> 48 </property> 49 50 <!-- Allow multihomed network for security, availability and performance--> 51 <property> 52 <name>dfs.namenode.rpc-bind-host</name> 53 <value>0.0.0.0</value> 54 <description> 55 controls what IP address the NameNode binds to. 56 0.0.0.0 means all available. 57 </description> 58 </property> 59 60 <property> 61 <name>dfs.namenode.servicerpc-bind-host</name> 62 <value>0.0.0.0</value> 63 <description> 64 controls what IP address the NameNode binds to. 65 0.0.0.0 means all available. 66 </description> 67 </property> 68 69 <property> 70 <name>dfs.namenode.http-bind-host</name> 71 <value>0.0.0.0</value> 72 <description> 73 controls what IP address the NameNode binds to. 74 0.0.0.0 means all available. 75 </description> 76 </property> 77 78 <property> 79 <name>dfs.namenode.https-bind-host</name> 80 <value>0.0.0.0</value> 81 <description> 82 controls what IP address the NameNode binds to. 83 0.0.0.0 means all available. 84 </description> 85 </property> 86 87 <property> 88 <name>dfs.client.use.datanode.hostname</name> 89 <value>true</value> 90 <description> 91 Whether clients should use datanode hostnames when 92 connecting to datanodes. 93 </description> 94 </property> 95 96 <property> 97 <name>dfs.datanode.use.datanode.hostname</name> 98 <value>true</value> 99 <description> 100 Whether datanodes should use datanode hostnames when 101 connecting to other datanodes for data transfer. 102 </description> 103 </property> 104</configuration>

1. dfs.webhdfs.enabled:

- Enables or disables the WebHDFS feature, which allows HDFS operations over HTTP.Value: true.

2. dfs.permissions.enabled and dfs.permissions:

- These properties control HDFS permissions.dfs.permissions.enabled specifies whether permissions are enabled in HDFS (value: false).dfs.permissions is deprecated but retained here with the same value.

3. dfs.namenode.name.dir and dfs.datanode.data.dir:

- Specifies the directory paths where the NameNode and DataNode store their data, respectively.

4. dfs.namenode.datanode.registration.ip-hostname-check:

- Determines whether the NameNode verifies that DataNodes register with IP addresses that match their hostname.

5. Host Binding Properties:

- Properties like dfs.namenode.rpc-bind-host, dfs.namenode.servicerpc-bind-host, dfs.namenode.http-bind-host, and dfs.namenode.https-bind-host control the IP addresses to which the NameNode binds for various services.

6. dfs.client.use.datanode.hostname and dfs.datanode.use.datanode.hostname:

- These properties determine whether clients and DataNodes should use datanode hostnames when connecting to each other for data transfer.

mapred-site.xml

As the heart of Hadoop's data processing engine, MapReduce relies on configurations defined in this file. mapreduce.framework.name determines the framework used for job execution, whether it's the classic MapReduce paradigm or newer alternatives like Apache Tez or Apache Spark.

1<?xml version="1.0" encoding="UTF-8"?> 2<?xml-stylesheet type="text/xsl" href="configuration.xsl"?> 3<!-- 4 Licensed under the Apache License, Version 2.0 (the "License"); 5 you may not use this file except in compliance with the License. 6 You may obtain a copy of the License at 7 8 http://www.apache.org/licenses/LICENSE-2.0 9 10 Unless required by applicable law or agreed to in writing, software 11 distributed under the License is distributed on an "AS IS" BASIS, 12 WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. 13 See the License for the specific language governing permissions and 14 limitations under the License. See accompanying LICENSE file. 15--> 16 17<!-- Put site-specific property overrides in this file. --> 18 19<configuration> 20 <property> 21 <name>mapreduce.framework.name</name> 22 <value>yarn</value> 23 <description> 24 How hadoop execute the job, use yarn to execute the job 25 </description> 26 </property> 27 28 <property> 29 <name>mapred_child_java_opts</name> 30 <value>-Xmx4096m</value> 31 </property> 32 33 <property> 34 <name>mapreduce.map.memory.mb</name> 35 <value>4096</value> 36 </property> 37 38 <property> 39 <name>mapreduce.reduce.memory.mb</name> 40 <value>8192</value> 41 </property> 42 43 <property> 44 <name>mapreduce.map.java.opts</name> 45 <value>-Xmx3072m</value> 46 </property> 47 48 <property> 49 <name>mapreduce.reduce.java.opts</name> 50 <value>-Xmx6144m</value> 51 </property> 52 53 <property> 54 <name>yarn.app.mapreduce.am.env</name> 55 <value>HADOOP_MAPRED_HOME=/opt/hadoop-3.3.6/</value> 56 <description> 57 Environment variable where MapReduce job will be processed 58 </description> 59 </property> 60 61 <property> 62 <name>mapreduce.map.env</name> 63 <value>HADOOP_MAPRED_HOME=/opt/hadoop-3.3.6/</value> 64 </property> 65 66 <property> 67 <name>mapreduce.reduce.env</name> 68 <value>HADOOP_MAPRED_HOME=/opt/hadoop-3.3.6/</value> 69 </property> 70 71 <!-- Allow multihomed network for security, availability and performance--> 72 <property> 73 <name>yarn.nodemanager.bind-host</name> 74 <value>0.0.0.0</value> 75 </property> 76</configuration>

1. mapreduce.framework.name:

- Specifies the framework used for executing MapReduce jobs. Here, it's set to yarn, indicating that YARN is utilized as the resource manager for job execution.

2. mapred_child_java_opts, mapreduce.map.memory.mb, mapreduce.reduce.memory.mb, mapreduce.map.java.opts, mapreduce.reduce.java.opts:

- These properties define memory-related settings for MapReduce tasks, such as the maximum heap size (Xmx) for map and reduce tasks.

3. yarn.app.mapreduce.am.env, mapreduce.map.env, mapreduce.reduce.env:

- These properties define environment variables for MapReduce tasks, particularly the HADOOP_MAPRED_HOME variable, which specifies the directory where MapReduce libraries and binaries are located.

4. yarn.nodemanager.bind-host:

- Specifies the IP address to which the NodeManager binds for communication. Setting it to 0.0.0.0 means it binds to all available IP addresses.

yarn-site.xml

YARN, the resource management layer of Hadoop, finds its directives here. Parameters like yarn.resourcemanager.hostname specify the hostname for the ResourceManager, the central authority responsible for allocating resources across the cluster. It also defines auxiliary services like the Shuffle service, essential for data exchange between Map and Reduce tasks.

1<?xml version="1.0" encoding="UTF-8"?> 2<?xml-stylesheet type="text/xsl" href="configuration.xsl"?> 3<!-- 4 Licensed under the Apache License, Version 2.0 (the "License"); 5 you may not use this file except in compliance with the License. 6 You may obtain a copy of the License at 7 8 http://www.apache.org/licenses/LICENSE-2.0 9 10 Unless required by applicable law or agreed to in writing, software 11 distributed under the License is distributed on an "AS IS" BASIS, 12 WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. 13 See the License for the specific language governing permissions and 14 limitations under the License. See accompanying LICENSE file. 15--> 16 17<!-- Put site-specific property overrides in this file. --> 18 19<configuration> 20 <property> 21 <name>yarn.log-aggregation-enable</name> 22 <value>true</value> 23 <description> 24 Log aggregation collects each container's logs and 25 moves these logs onto a file-system 26 </description> 27 </property> 28 29 <property> 30 <name>yarn.log.server.url</name> 31 <value>http://historyserver:8188/applicationhistory/logs/</value> 32 <description> 33 URL for log aggregation server 34 </description> 35 </property> 36 37 <property> 38 <name>yarn.resourcemanager.recovery.enabled</name> 39 <value>true</value> 40 </property> 41 42 <property> 43 <name>yarn.resourcemanager.store.class</name> 44 <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore</value> 45 </property> 46 47 <property> 48 <name>yarn.resourcemanager.scheduler.class</name> 49 <value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler</value> 50 </property> 51 52 <property> 53 <name>yarn.scheduler.capacity.root.default.maximum-allocation-mb</name> 54 <value>8192</value> 55 </property> 56 57 <property> 58 <name>yarn.scheduler.capacity.root.default.maximum-allocation-vcores</name> 59 <value>4</value> 60 </property> 61 62 <property> 63 <name>yarn.resourcemanager.fs.state-store.uri</name> 64 <value>/rmstate</value> 65 </property> 66 67 <property> 68 <name>yarn.resourcemanager.system-metrics-publisher.enabled</name> 69 <value>true</value> 70 </property> 71 72 <property> 73 <name>yarn.resourcemanager.hostname</name> 74 <value>resourcemanager</value> 75 </property> 76 77 <property> 78 <name>yarn.resourcemanager.address</name> 79 <value>resourcemanager:8032</value> 80 </property> 81 82 <property> 83 <name>yarn.resourcemanager.scheduler.address</name> 84 <value>resourcemanager:8030</value> 85 </property> 86 87 <property> 88 <name>yarn.resourcemanager.resource-tracker.address</name> 89 <value>resourcemanager:8031</value> 90 </property> 91 92 <property> 93 <name>yarn.timeline-service.enabled</name> 94 <value>true</value> 95 </property> 96 97 <property> 98 <name>yarn.timeline-service.generic-application-history.enabled</name> 99 <value>true</value> 100 </property> 101 102 <property> 103 <name>yarn.timeline-service.hostname</name> 104 <value>historyserver</value> 105 </property> 106 107 <property> 108 <name>mapreduce.map.output.compress</name> 109 <value>true</value> 110 </property> 111 112 <property> 113 <name>mapred.map.output.compress.codec</name> 114 <value>org.apache.hadoop.io.compress.SnappyCodec</value> 115 </property> 116 117 <property> 118 <name>yarn.nodemanager.resource.memory-mb</name> 119 <value>16384</value> 120 </property> 121 122 <property> 123 <name>yarn.nodemanager.resource.cpu-vcores</name> 124 <value>8</value> 125 </property> 126 127 <property> 128 <name>yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage</name> 129 <value>98.5</value> 130 </property> 131 132 <property> 133 <name>yarn.nodemanager.remote-app-log-dir</name> 134 <value>/app-logs</value> 135 </property> 136 137 <property> 138 <name>yarn.nodemanager.aux-services</name> 139 <value>mapreduce_shuffle</value> 140 </property> 141 142 <property> 143 <name>yarn.nodemanager.auxservices.mapreduce.shuffle.class</name> 144 <value>org.apache.hadoop.mapred.ShuffleHandler</value> 145 </property> 146 147 <!-- Allow multihomed network for security, availability and performance--> 148 <property> 149 <name>yarn.resourcemanager.bind-host</name> 150 <value>0.0.0.0</value> 151 </property> 152 153 <property> 154 <name>yarn.nodemanager.bind-host</name> 155 <value>0.0.0.0</value> 156 </property> 157 158 <property> 159 <name>yarn.timeline-service.bind-host</name> 160 <value>0.0.0.0</value> 161 </property> 162</configuration>

1. yarn.log-aggregation-enable:

- Enables or disables log aggregation, which collects each container's logs and moves them to a file system.Value: true.

2. yarn.log.server.url:

- Specifies the URL for the log aggregation server where logs are stored.Value: http://historyserver:8188/applicationhistory/logs/.

3. yarn.resourcemanager.recovery.enabled and yarn.resourcemanager.store.class:

- These properties control ResourceManager recovery functionality and specify the class for the state store.yarn.resourcemanager.recovery.enabled is set to true.yarn.resourcemanager.store.class specifies the class for the ResourceManager state store.

4. yarn.resourcemanager.scheduler.class:

- Specifies the class for the ResourceManager scheduler, which determines how resources are allocated among applications.Value: org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler.

5. yarn.scheduler.capacity.root.default.maximum-allocation-mb and yarn.scheduler.capacity.root.default.maximum-allocation-vcores:

- These properties define the maximum allocation of memory and virtual cores for each application in the default root queue of the CapacityScheduler.

Moving ahead to create Dockerfile for namenode, datanode, historyserver, resourcemanager and nodemanager.

1. Namenode

Let's start with the name node. The NameNode is the central component of the Hadoop Distributed File System (HDFS), acting as the primary manager and coordinator of data storage and retrieval within the Hadoop cluster. It serves as a crucial entity in the architecture of Hadoop, responsible for maintaining metadata about the files and directories stored in the distributed file system.

To create a namenode, we can create a file named Dockerfile inside namenode directory.

1FROM hadoop-base:3.3.6 2 3HEALTHCHECK CMD curl -f http://localhost:9870/ || exit 1 4 5ENV HDFS_CONF_dfs_namenode_name_dir=file:///hadoop/dfs/name 6RUN mkdir -p /hadoop/dfs/name 7VOLUME /hadoop/dfs/name 8 9ADD run.sh /run.sh 10RUN chmod a+x /run.sh 11 12EXPOSE 9870 13 14CMD ["/run.sh"]

This Dockerfile defines a containerized environment for a Hadoop NameNode based on the hadoop-base:3.3.6 image. It sets up data persistence by creating a directory for storing NameNode metadata, exposes port 9870 for web UI access, and includes a health check to ensure the NameNode service's availability. Additionally, it copies and makes executable a script named run.sh, responsible for starting the NameNode service upon container startup.

The run.sh shell script contains these lines of code :

1#!/bin/bash 2 3# Check if namenode dir exists 4namedir=`echo $HDFS_CONF_dfs_namenode_name_dir | perl -pe 's#file://##'` 5if [ ! -d $namedir ]; then 6 echo "Namenode name directory not found: $namedir" 7 exit 2 8fi 9 10# check whether clustername specified or not 11# we setup clustername in docker-compose 12if [ -z "$CLUSTER_NAME" ]; then 13 echo "Cluster name not specified" 14 exit 2 15fi 16 17# remove some unused files from namenode directory 18echo "remove lost+found from $namedir" 19rm -r $namedir/lost+found 20 21# format namenode dir if there is no files or directory exists in it 22if [ "`ls -A $namedir`" == "" ]; then 23 echo "Formatting namenode name directory: $namedir" 24 $HADOOP_HOME/bin/hdfs --config $HADOOP_CONF_DIR namenode -format $CLUSTER_NAME 25fi 26 27# kick-off namenode server 28$HADOOP_HOME/bin/hdfs --config $HADOOP_CONF_DIR namenode

This bash script is designed to be executed within a Docker container to manage the initialization and startup of a Hadoop NameNode service. Here's a breakdown of its functionality:

- It extracts the value of the HDFS_CONF_dfs_namenode_name_dir environment variable, which likely specifies the directory where the NameNode stores its metadata. It removes the file:// prefix from the directory path.

- It checks if the specified NameNode directory exists. If not, it prints an error message and exits with a non-zero status code.

- It checks if the CLUSTER_NAME environment variable is set, which is typically configured in the Docker Compose file for the Hadoop cluster. If not set, it prints an error message and exits.

- It removes the lost+found directory from the NameNode directory, as it's typically unnecessary and can cause issues.

- It checks if the NameNode directory is empty. If it's empty, it formats the NameNode directory using the hdfs namenode -format command with the specified cluster name.

- Finally, it starts the NameNode server using the hdfs namenode command with the specified configuration directory. This command initiates the NameNode process, making it ready to serve requests from the Hadoop cluster.

Overall, this script ensures that the NameNode directory is properly initialized and formatted before starting the NameNode service, ensuring the reliability and integrity of the Hadoop file system.

2. Datanode

Next move to data node. The DataNodes are integral components of the Hadoop ecosystem, responsible for storing and managing data within the Hadoop Distributed File System (HDFS). They handle the actual storage of data blocks across multiple storage devices, replicate data to ensure fault tolerance, and regularly communicate with the NameNode to report their health status and the data blocks they store. DataNodes facilitate data retrieval and streaming operations, play a crucial role in data replication strategies for fault tolerance, and are essential for the scalability and reliability of Hadoop clusters.

1FROM hadoop-base:3.3.6 2 3HEALTHCHECK CMD curl -f http://localhost:9864/ || exit 1 4 5ENV HDFS_CONF_dfs_datanode_data_dir=file:///hadoop/dfs/data 6RUN mkdir -p /hadoop/dfs/data 7VOLUME /hadoop/dfs/data 8 9ADD run.sh /run.sh 10RUN chmod a+x /run.sh 11 12EXPOSE 9864 13 14CMD ["/run.sh"]

This Dockerfile sets up a container environment for a Hadoop DataNode based on the hadoop-base:3.3.6 image. It defines a health check to ensure the availability of the DataNode service, exposes port 9864 for potential access, and specifies a default command to execute when the container starts, likely initiating the DataNode service. Additionally, it configures environment variables, such as HDFS_CONF_dfs_datanode_data_dir, to specify the directory for storing DataNode data and ensures the creation of necessary directories for data storage. Lastly, it includes a script run.sh to manage the execution of the DataNode service and sets permissions accordingly.

The run.sh shell script contains these lines of code :

1#!/bin/bash 2 3# Check if datanode data dir exists 4datadir=`echo $HDFS_CONF_dfs_datanode_data_dir | perl -pe 's#file://##'` 5if [ ! -d $datadir ]; then 6 echo "Datanode data directory not found: $datadir" 7 exit 2 8fi 9 10# if yes run this code to kick-off datanode 11$HADOOP_HOME/bin/hdfs --config $HADOOP_CONF_DIR datanode

This bash script is designed to be executed within a Docker container to manage the initialization and startup of a Hadoop DataNode service. Here's a breakdown of its functionality:

- It extracts the value of the HDFS_CONF_dfs_datanode_data_dir environment variable, which likely specifies the directory where the DataNode stores its data. It removes the file:// prefix from the directory path.

- It checks if the specified DataNode directory exists. If not, it prints an error message and exits with a non-zero status code.

- If the DataNode directory exists, it executes the command to start the DataNode service using the hdfs datanode command with the specified configuration directory ($HADOOP_CONF_DIR).

Overall, this script ensures that the DataNode directory is properly initialized before starting the DataNode service, ensuring the reliability and integrity of the Hadoop file system.

3. History Server

Next we have history server. The History Server, a pivotal component in the Hadoop ecosystem, serves as a centralized repository for storing and accessing historical information about completed MapReduce jobs. It retains crucial details including job configurations, start and end times, status updates, counters, and task-level data, enabling users and administrators to analyze job performance, resource utilization, and execution patterns. With its web-based user interface, the History Server provides an intuitive platform for searching, viewing, and scrutinizing job histories, fostering performance optimization and troubleshooting efforts. By ensuring fault tolerance and seamless integration with other Hadoop ecosystem tools, it enhances the efficiency, reliability, and manageability of big data processing workflows.

1FROM hadoop-base:3.3.6 2 3HEALTHCHECK CMD curl -f http://localhost:8188/ || exit 1 4 5ENV YARN_CONF_yarn_timeline___service_leveldb___timeline___store_path=/hadoop/yarn/timeline 6RUN mkdir -p /hadoop/yarn/timeline 7VOLUME /hadoop/yarn/timeline 8 9ADD run.sh /run.sh 10RUN chmod a+x /run.sh 11 12EXPOSE 8188 13 14CMD ["/run.sh"]

This Dockerfile configures a container environment for a Hadoop YARN Timeline Server based on the hadoop-base:3.3.6 image. It defines a health check to ensure the availability of the Timeline Server, exposes port 8188 for potential access, and specifies a default command to execute when the container starts, likely initiating the Timeline Server service. Additionally, it sets up environment variables, such as YARN_CONF_yarn_timeline___service_leveldb___timeline___store_path, to specify the path for storing timeline data and ensures the creation of necessary directories for data storage. Lastly, it includes a script run.sh to manage the execution of the Timeline Server service and sets permissions accordingly.

The run.sh shell script contains these lines of code :

1#!/bin/bash 2 3# Kick-off yarn server 4$HADOOP_HOME/bin/yarn --config $HADOOP_CONF_DIR historyserver

This bash script is designed to start the YARN History Server within a Hadoop environment. Here is an explanation of its functionality:

- Kick-off YARN History Server: The script contains a single command that initiates the YARN History Server. This is done using the yarn command-line tool provided by Hadoop.

- Configuration Directory: The --config $HADOOP_CONF_DIR option specifies the directory where the Hadoop configuration files are located. This ensures that the YARN History Server uses the appropriate configurations for its operation.

- History Server Command: The historyserver argument passed to the yarn command tells YARN to start the History Server, which is responsible for maintaining and serving information about completed YARN applications, such as MapReduce jobs.

In summary, this script simply starts the YARN History Server using the provided Hadoop configuration directory, enabling users to access historical data about completed YARN applications.

4. Resource Manager

Next is resource manager. The Resource Manager is a crucial component of the Hadoop YARN (Yet Another Resource Negotiator) architecture, responsible for managing and allocating cluster resources to various applications running in the Hadoop ecosystem. It consists of two main components: the Scheduler, which allocates resources based on resource availability and application requirements, and the Application Manager, which manages application life cycles from submission to completion. By coordinating resource usage across the cluster, the Resource Manager ensures efficient utilization and prevents resource contention, thereby enhancing the overall performance and scalability of the Hadoop environment.

1FROM hadoop-base:3.3.6 2 3HEALTHCHECK CMD curl -f http://localhost:8088/ || exit 1 4 5ADD run.sh /run.sh 6RUN chmod a+x /run.sh 7 8EXPOSE 8088 9 10CMD ["/run.sh"]

This Dockerfile sets up a container for the Hadoop YARN Resource Manager using the hadoop-base:3.3.6 image. It includes a health check to verify the Resource Manager's web interface on port 8088, ensuring the service is running correctly. The run.sh script, added to the container and made executable, is used to start the Resource Manager. The container exposes port 8088 for access to the Resource Manager's web interface.

The run.sh shell script contains these lines of code :

1#!/bin/bash 2 3$HADOOP_HOME/bin/yarn --config $HADOOP_CONF_DIR resourcemanager

$HADOOP_HOME/bin/yarn: This command invokes the yarn script located in the bin directory of the Hadoop installation. $HADOOP_HOME is an environment variable that should be set to the root directory of the Hadoop installation.--config $HADOOP_CONF_DIR: This option specifies the configuration directory to be used by the YARN command. $HADOOP_CONF_DIR is an environment variable that points to the directory containing Hadoop's configuration files.resourcemanager: This argument tells the yarn command to start the Resource Manager, which is a key component in the YARN architecture responsible for managing and allocating cluster resources.

5. Node Manager

The Node Manager is a crucial component of the Hadoop YARN architecture, responsible for managing individual nodes in a Hadoop cluster. It monitors resource usage (CPU, memory, disk) of the containers running on each node and reports this information to the Resource Manager. The Node Manager also handles the execution of tasks, manages their life cycle, and oversees their resource allocation. By ensuring efficient resource utilization and providing localized management, the Node Manager contributes to the overall stability and performance of the Hadoop cluster.

1FROM hadoop-base:3.3.6 2 3HEALTHCHECK CMD curl -f http://localhost:8042/ || exit 1 4 5ADD run.sh /run.sh 6RUN chmod a+x /run.sh 7 8EXPOSE 8042 9 10CMD ["/run.sh"]

This Dockerfile sets up a container environment for running a Hadoop YARN Node Manager, based on the hadoop-base:3.3.6 image. Here's a breakdown of its components:

- Base Image: FROM hadoop-base:3.3.6 specifies that the container uses the hadoop-base:3.3.6 image as its foundation, which includes Hadoop version 3.3.6.

- Health Check: The HEALTHCHECK instruction ensures the Node Manager is functioning properly by using the curl command to check if the Node Manager's web interface is accessible at http://localhost:8042/. If this check fails, the health status of the container is marked as unhealthy.

- Add Script: ADD run.sh /run.sh copies the run.sh script from the local build context into the container's root directory. This script will be used to start the Node Manager.

- Set Permissions: RUN chmod a+x /run.sh makes the run.sh script executable, ensuring it can be run when the container starts.

- Expose Port: EXPOSE 8042 opens port 8042, which is the default port for the Node Manager's web interface. This allows external access to the Node Manager's monitoring and management interface.

- Default Command: CMD ["/run.sh"] specifies that the container should execute the run.sh script by default when it starts. This script will initialize and start the Node Manager service.

The run.sh shell script contains these lines of code :

1#!/bin/bash 2 3# Kick-off nodemanager to manage namenode, datanode inside cluster 4# This is second level of resource manager 5# Nodemanager will manage resource hand in hand with yarn 6$HADOOP_HOME/bin/yarn --config $HADOOP_CONF_DIR nodemanager

$HADOOP_HOME/bin/yarn: This command invokes the YARN script located in the bin directory of the Hadoop installation. --config $HADOOP_CONF_DIR: This option specifies the configuration directory to be used by the YARN command. $HADOOP_CONF_DIR is an environment variable that points to the directory containing Hadoop's configuration files.

nodemanager: This argument tells the yarn command to start the Node Manager, which is responsible for managing and monitoring resources (CPU, memory, disk) on individual nodes within the cluster.

6. Hadoop Base Image

Now all the things are ready. The last thing we need to do is to create the Hadoop Base Image from the docker file.

1# Use the official OpenJDK 11 image as the base for the build 2FROM openjdk:11-jdk AS jdk 3 4# Use the official Python 3.11 image 5FROM python:3.11 6 7USER root 8 9# -------------------------------------------------------- 10# JAVA 11# -------------------------------------------------------- 12RUN apt-get update && apt-get install -y --no-install-recommends \ 13 python3-launchpadlib \ 14 software-properties-common && \ 15 apt-get clean && rm -rf /var/lib/apt/lists/* 16 17# Set the JAVA_HOME environment variable for the AMD64 architecture 18ENV JAVA_HOME=/usr/local/openjdk-11 19 20# Copy OpenJDK from the first stage 21COPY $JAVA_HOME $JAVA_HOME 22 23# -------------------------------------------------------- 24# HADOOP 25# -------------------------------------------------------- 26ENV HADOOP_VERSION=3.3.6 27ENV HADOOP_URL=https://downloads.apache.org/hadoop/common/hadoop-$HADOOP_VERSION/hadoop-$HADOOP_VERSION.tar.gz 28ENV HADOOP_PREFIX=/opt/hadoop-$HADOOP_VERSION 29ENV HADOOP_CONF_DIR=/etc/hadoop 30ENV MULTIHOMED_NETWORK=1 31ENV USER=root 32ENV HADOOP_HOME=/opt/hadoop-$HADOOP_VERSION 33ENV PATH $HADOOP_PREFIX/bin/:$PATH 34ENV PATH $HADOOP_HOME/bin/:$PATH 35 36RUN set -x \ 37 && curl -fSL "$HADOOP_URL" -o /tmp/hadoop.tar.gz \ 38 && tar -xvf /tmp/hadoop.tar.gz -C /opt/ \ 39 && rm /tmp/hadoop.tar.gz* 40 41RUN ln -s /opt/hadoop-$HADOOP_VERSION/etc/hadoop /etc/hadoop 42RUN mkdir /opt/hadoop-$HADOOP_VERSION/logs 43RUN mkdir /hadoop-data 44 45USER root 46 47COPY conf/core-site.xml $HADOOP_CONF_DIR/core-site.xml 48COPY conf/hdfs-site.xml $HADOOP_CONF_DIR/hdfs-site.xml 49COPY conf/mapred-site.xml $HADOOP_CONF_DIR/mapred-site.xml 50COPY conf/yarn-site.xml $HADOOP_CONF_DIR/yarn-site.xml

This Dockerfile defines a multi-stage build for setting up a Hadoop environment. Here's a breakdown of its components:

- Base Images:

- FROM openjdk:11-jdk AS jdk: The first stage uses the official OpenJDK 11 image as the base for installing Java Development Kit (JDK).FROM python:3.11: The second stage uses the official Python 3.11 image.

- JAVA Installation:

- The RUN command installs necessary packages for Java and cleans up the installation.ENV JAVA_HOME=/usr/local/openjdk-11: Sets the JAVA_HOME environment variable.COPY --from=jdk $JAVA_HOME $JAVA_HOME: Copies Java from the first stage to the second stage.

- HADOOP Installation:

- Specifies environment variables for Hadoop version, URLs, directories, and paths.Downloads and extracts Hadoop tarball.Sets up Hadoop configurations and directories.Copies configuration files (core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml) into the Hadoop configuration directory.

Overall, this Dockerfile sets up a Hadoop environment on top of Python 3.11, using OpenJDK 11 for Java dependencies, and installs and configures Hadoop with the necessary configuration files.

As all our Dockerfiles are now complete we can create the docker-compose.yml

1version: "2.4" 2 3services: 4 namenode: 5 build: ./namenode 6 container_name: namenode 7 volumes: 8 - hadoop_namenode:/hadoop/dfs/name 9 - ./data/:/hadoop-data/input 10 - ./map_reduce/:/hadoop-data/map_reduce 11 environment: 12 - CLUSTER_NAME=test 13 ports: 14 - "9870:9870" 15 - "8020:8020" 16 networks: 17 - hadoop_network 18 19 resourcemanager: 20 build: ./resourcemanager 21 container_name: resourcemanager 22 restart: on-failure 23 depends_on: 24 - namenode 25 - datanode1 26 - datanode2 27 - datanode3 28 ports: 29 - "8089:8088" 30 networks: 31 - hadoop_network 32 33 historyserver: 34 build: ./historyserver 35 container_name: historyserver 36 depends_on: 37 - namenode 38 - datanode1 39 - datanode2 40 volumes: 41 - hadoop_historyserver:/hadoop/yarn/timeline 42 ports: 43 - "8188:8188" 44 networks: 45 - hadoop_network 46 47 nodemanager1: 48 build: ./nodemanager 49 container_name: nodemanager1 50 depends_on: 51 - namenode 52 - datanode1 53 - datanode2 54 ports: 55 - "8042:8042" 56 networks: 57 - hadoop_network 58 59 datanode1: 60 build: ./datanode 61 container_name: datanode1 62 depends_on: 63 - namenode 64 volumes: 65 - hadoop_datanode1:/hadoop/dfs/data 66 networks: 67 - hadoop_network 68 69 datanode2: 70 build: ./datanode 71 container_name: datanode2 72 depends_on: 73 - namenode 74 volumes: 75 - hadoop_datanode2:/hadoop/dfs/data 76 networks: 77 - hadoop_network 78 79 datanode3: 80 build: ./datanode 81 container_name: datanode3 82 depends_on: 83 - namenode 84 volumes: 85 - hadoop_datanode3:/hadoop/dfs/data 86 networks: 87 - hadoop_network 88 89volumes: 90 hadoop_namenode: 91 hadoop_datanode1: 92 hadoop_datanode2: 93 hadoop_datanode3: 94 hadoop_historyserver: 95 96networks: 97 hadoop_network: 98 name: hadoop_network 99 external: true

This Docker Compose configuration defines a multi-container environment for running a Hadoop cluster. Here's a breakdown of its components:

- Services: Specifies various containers for different components of the Hadoop cluster, including NameNode, ResourceManager, HistoryServer, NodeManagers, and DataNodes.

- Volumes: Defines volumes for persisting data, such as HDFS data directories and YARN timeline data.

- Networks: Creates a Docker network named "hadoop_network" for communication between containers.

Each service is built from a corresponding Dockerfile located in separate directories (namenode, resourcemanager, historyserver, nodemanager, datanode). Dependencies between services are specified using the depends_on directive, ensuring that containers are started in the correct order. Ports are exposed for accessing web interfaces of various Hadoop components.

Overall, this Docker Compose configuration simplifies the deployment and management of a Hadoop cluster environment.

In the end we can automate the process of building docker images and start running the images but start-cluster.sh file.

1#!/bin/bash 2 3docker network create hadoop_network 4 5docker build -t hadoop-base:3.3.6 . 6 7docker-compose up -d

This bash script performs a series of commands to set up a Hadoop cluster environment using Docker and Docker Compose. Here's a breakdown of each command:

- Create Docker Network: docker network create hadoop_network creates a Docker network named "hadoop_network". This network is used to facilitate communication between the containers within the Hadoop cluster.

- Build Docker Image: docker build -t hadoop-base:3.3.6 . builds a Docker image with the tag "hadoop-base:3.3.6" using the Dockerfile located in the current directory (.). This Docker image serves as the base image for the Hadoop cluster components.

- Start Docker Compose: docker-compose up -d starts the Docker Compose-defined services in detached mode (-d). This command reads the Docker Compose file in the current directory and starts the containers defined in it, setting up the entire Hadoop cluster environment.

Conclusion

You have successfully set up a Hadoop 3.3.6 multi-node cluster using WSL2, Docker, and Docker Compose. This setup provides a scalable and flexible environment for your big data processing needs.

Feel free to explore more configurations and optimizations for your specific use case.